Introduction

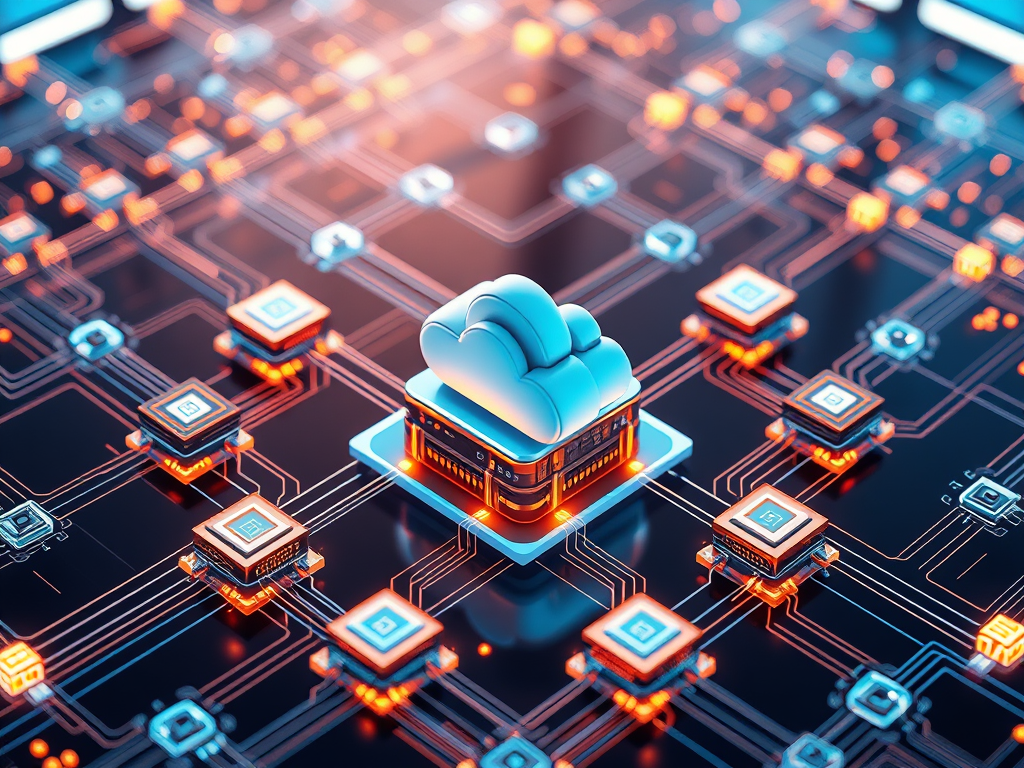

In the world of software engineering, innovation is constant. One of the most transformative trends in recent years is the rise of edge computing. As we move toward a future dominated by the Internet of Things (IoT), 5G, and real-time analytics, traditional centralized computing models are being challenged by the need for faster, more efficient, and scalable solutions. Edge computing is emerging as a powerful answer to these challenges, fundamentally redefining how applications are architected.

What is Edge Computing?

Edge computing refers to the practice of processing data closer to the source of data generation rather than relying on centralized data centers. By moving computation and data storage to the “edge” of the network, edge computing reduces latency, conserves bandwidth, and improves the reliability of applications.
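
To see why proximity reduces latency, a rough propagation-delay estimate helps. The sketch below assumes signals travel through optical fiber at about 200,000 km/s (roughly two thirds of the speed of light) and ignores routing, queuing, and processing time, so real latencies will be higher. The two distances are illustrative assumptions, not measurements.

```python
FIBER_SPEED_KM_PER_S = 200_000  # assumption: about 2/3 of the speed of light in a vacuum


def round_trip_ms(distance_km: float) -> float:
    """Best-case round-trip propagation delay over fiber, in milliseconds."""
    return 2 * distance_km / FIBER_SPEED_KM_PER_S * 1000


cloud_rtt = round_trip_ms(1_500)  # hypothetical distant data center
edge_rtt = round_trip_ms(10)      # hypothetical nearby edge node
print(f"cloud: {cloud_rtt:.1f} ms, edge: {edge_rtt:.1f} ms")
```

Under these assumptions the physical floor is about 15 ms for the distant data center and about 0.1 ms for the nearby node, before any processing time is added.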

Why Traditional Architectures Are Struggling

Traditional cloud-centric architectures rely heavily on centralized servers to process and analyze data. While effective for many use cases, these architectures face significant challenges in scenarios requiring:

- Low Latency: Applications like autonomous vehicles, real-time gaming, and augmented reality demand near-instantaneous responses.

- Bandwidth Efficiency: IoT devices generate massive amounts of data, making it costly and inefficient to transmit all this data to centralized servers.

- Reliability: A single point of failure in centralized systems can disrupt services for millions of users.
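
The bandwidth point above can be made concrete with a back-of-the-envelope calculation. The fleet size, payload size, and reporting rates below are hypothetical numbers chosen for illustration; the sketch only shows how quickly raw telemetry adds up compared with forwarding a periodic summary.

```python
SECONDS_PER_DAY = 24 * 60 * 60


def daily_upload_gb(devices: int, bytes_per_message: int, messages_per_second: float) -> float:
    """Data volume sent upstream per day, in gigabytes (1 GB = 10**9 bytes)."""
    return devices * bytes_per_message * messages_per_second * SECONDS_PER_DAY / 1e9


# Hypothetical fleet: 10,000 sensors, 200-byte messages.
raw_gb = daily_upload_gb(10_000, 200, 1.0)         # every reading goes to the cloud
summary_gb = daily_upload_gb(10_000, 200, 1 / 60)  # one summary per device per minute
print(f"raw: {raw_gb:.1f} GB/day, summarized: {summary_gb:.2f} GB/day")
```

With these assumed numbers, streaming every reading costs about 172.8 GB per day, while sending one summary per minute costs about 2.88 GB per day.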

The Core Principles of Edge Architecture

To address these limitations, edge computing introduces architectural principles designed for decentralization:

- Distributed Computing: Edge computing decentralizes processing by distributing workloads across multiple edge nodes, reducing dependency on central servers. This principle ensures that applications can function smoothly even under high demand, as computation is handled closer to where the data originates.

- Localized Data Processing: Processing data near its source minimizes latency and reduces the volume of data sent to central servers. For example, an IoT sensor in a factory can preprocess data on-site, sending only critical insights to the cloud.

- Interoperability: A robust edge architecture ensures seamless integration between various components, including edge devices, gateways, and cloud systems. This flexibility enables applications to scale and adapt to new technologies or requirements with minimal reconfiguration.

- Security at the Edge: With data processed closer to its source, sensitive information is less exposed during transmission. Edge architectures emphasize encryption, secure access controls, and real-time threat detection to safeguard data.

- Resilience Through Redundancy: Edge nodes are designed to operate independently, ensuring that critical functionalities remain accessible even if connectivity to the central server is lost. This redundancy enhances system reliability, particularly in mission-critical applications.

- Real-Time Decision Making: By processing data locally, edge computing supports near-instantaneous decision-making, which is vital for applications like autonomous vehicles, healthcare monitoring, and smart grids. These decisions rely on localized computation rather than waiting for a round trip to cloud-based analysis.

- Scalability Through Modularity: Edge architectures often employ modular components such as containerized services and microservices. This approach allows developers to update, scale, or replace specific functionalities without disrupting the entire system.

- Energy Efficiency: Localized processing reduces the need for continuous data transmission to centralized servers, which saves the energy otherwise spent on network transfer. Additionally, many edge devices are optimized for low-power operation, making them well suited to remote or resource-constrained environments.
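
The localized data processing principle above (a factory sensor forwarding only critical insights) can be sketched as a simple on-site filter. This is a minimal illustration, not a production design: the `Reading` type, the `critical_readings` function, and the temperature limits are all hypothetical names and values introduced here.

```python
from dataclasses import dataclass


@dataclass
class Reading:
    sensor_id: str
    temperature_c: float


def critical_readings(readings, low=10.0, high=80.0):
    """Keep only readings outside the assumed normal range [low, high]."""
    return [r for r in readings if not (low <= r.temperature_c <= high)]


batch = [Reading("press-1", 21.5), Reading("press-2", 95.0), Reading("press-3", 4.0)]
to_cloud = critical_readings(batch)
print([r.sensor_id for r in to_cloud])
```

Only the two out-of-range readings would be forwarded; the normal reading stays on-site, where it could still be logged or aggregated locally.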

Key Components of Edge Application Architecture

- Edge Devices: These are the primary data-generating and processing units, such as IoT sensors, cameras, and wearable devices. Edge devices are responsible for collecting raw data and often performing initial computations, like filtering or compressing data. Examples include smart thermostats, industrial sensors, and fitness trackers. Many of these devices run lightweight operating systems to support edge functionalities.

- Edge Gateways: Serving as intermediaries between edge devices and higher layers of the architecture, edge gateways aggregate data from multiple devices, perform preprocessing, and communicate with cloud or fog nodes. These gateways often include capabilities like protocol translation, security enforcement, and localized data analytics. For instance, in a smart home setup, an edge gateway can coordinate devices like cameras, lights, and sensors to work seamlessly together.

- Fog Nodes: Positioned between edge devices and cloud systems, fog nodes provide intermediate processing and storage capabilities. These nodes reduce the computational burden on both edge devices and central servers, enabling more complex analytics and machine learning tasks to be performed closer to the data source. Fog nodes are particularly useful in industrial IoT applications, where large volumes of data must be processed in near real time.

- Cloud Integration: While edge and fog layers handle localized processing, cloud systems remain essential for long-term storage, advanced analytics, and centralized management. Edge architectures integrate with cloud platforms to provide global insights, backup, and redundancy. For instance, in autonomous vehicle systems, while edge nodes handle immediate decisions, the cloud aggregates data for training improved machine learning models.

- Networking Components: Reliable communication infrastructure is crucial for edge computing. Networking components include routers, switches, and 5G towers that ensure data flows efficiently between edge devices, gateways, and the cloud. Emerging technologies like software-defined networking (SDN) enable dynamic optimization of data routes, improving the overall performance of edge systems.

- Edge-Oriented Software Platforms: Software platforms designed for edge computing provide developers with tools to deploy, manage, and update applications across distributed environments. Examples include Kubernetes-based systems tailored for edge environments, such as K3s and KubeEdge, and IoT platforms such as AWS IoT Greengrass and Azure IoT Edge.

- Security Frameworks: To address the expanded attack surface introduced by distributed architectures, security frameworks at the edge include end-to-end encryption, device authentication, and intrusion detection systems. These frameworks ensure data integrity and confidentiality across all layers of the architecture.

- Power Management Systems: Since edge nodes often operate in remote or resource-constrained environments, power management systems optimize energy consumption. These include solar-powered devices, low-power processors, and mechanisms to hibernate inactive components without affecting overall functionality.
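
As an illustration of the aggregation role described for edge gateways, the sketch below groups raw readings by device and reduces each group to a small summary before anything is sent upstream. The function name `aggregate` and the `(device_id, value)` input shape are assumptions made for this example.

```python
from collections import defaultdict
from statistics import mean


def aggregate(readings):
    """Summarize (device_id, value) pairs into one record per device."""
    by_device = defaultdict(list)
    for device_id, value in readings:
        by_device[device_id].append(value)
    return {
        device_id: {
            "count": len(values),
            "min": min(values),
            "max": max(values),
            "mean": mean(values),
        }
        for device_id, values in by_device.items()
    }


window = [("thermostat", 20.0), ("thermostat", 22.0), ("door", 1.0)]
print(aggregate(window))
```

A gateway could run a function like this once per time window, so the cloud receives one compact record per device instead of every individual reading.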

Real-World Applications of Edge Computing

- Autonomous Vehicles: Edge computing enables real-time decision-making by processing data from sensors and cameras locally.

- Smart Cities: Traffic lights, environmental sensors, and surveillance systems rely on edge nodes to process data in real time.

- Healthcare: Wearable devices and remote monitoring systems use edge computing to analyze patient data quickly.

- Retail: Edge computing powers personalized customer experiences and inventory management through local data processing.

- Industrial IoT: Factories leverage edge nodes to monitor machinery, detect anomalies, and optimize operations.
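
For the Industrial IoT case, anomaly detection on an edge node can be as simple as comparing each new reading with recent history. The sketch below uses a rolling z-score, which is one common technique among many and not necessarily what any particular factory system uses. The class name, window size, warm-up length, and threshold are assumptions for illustration.

```python
from collections import deque
from statistics import mean, stdev


class RollingAnomalyDetector:
    """Flag values that deviate strongly from a rolling window of recent values."""

    def __init__(self, window=20, threshold=3.0, min_samples=5):
        self.values = deque(maxlen=window)  # oldest values fall out automatically
        self.threshold = threshold
        self.min_samples = min_samples

    def is_anomaly(self, value: float) -> bool:
        anomalous = False
        if len(self.values) >= self.min_samples:
            mu = mean(self.values)
            sigma = stdev(self.values)
            anomalous = sigma > 0 and abs(value - mu) > self.threshold * sigma
        if not anomalous:
            # Only normal values update the baseline, so a spike does not skew it.
            self.values.append(value)
        return anomalous


detector = RollingAnomalyDetector()
vibration = [10.0, 10.1, 9.9, 10.0, 10.2, 9.8, 10.1, 9.9, 25.0]
flags = [detector.is_anomaly(v) for v in vibration]
print(flags[-1])  # the final spike is flagged
```

Because the check runs locally, the node can raise an alert immediately and send only the flagged event upstream.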

Challenges in Edge Computing Adoption

- Infrastructure Costs: Deploying and maintaining edge nodes can be expensive.

- Security Concerns: Decentralized architectures increase the attack surface.

- Data Management: Ensuring consistency and synchronization across distributed nodes can be complex.

- Skill Gap: Designing and managing edge architectures requires specialized knowledge.
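
To give a sense of the data management challenge, the sketch below shows one of the simplest reconciliation strategies, last-writer-wins, for merging state from two nodes. It assumes node clocks are reasonably synchronized and that losing the older of two concurrent writes is acceptable; systems that cannot accept this typically need something stronger, such as vector clocks or CRDTs. The function name and the `key -> (timestamp, value)` layout are hypothetical.

```python
def merge_lww(local: dict, remote: dict) -> dict:
    """Merge two key -> (timestamp, value) maps, keeping the newest entry per key."""
    merged = dict(local)
    for key, (timestamp, value) in remote.items():
        if key not in merged or timestamp > merged[key][0]:
            merged[key] = (timestamp, value)
    return merged


node_a = {"setpoint": (100, 21.0), "mode": (105, "auto")}
node_b = {"setpoint": (110, 22.5), "fan": (90, "low")}
print(merge_lww(node_a, node_b))
```

Here the newer `setpoint` from `node_b` replaces the older one, while keys that exist on only one node are carried over unchanged.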

Best Practices for Designing Edge Architectures

- Prioritize Use Cases: Begin by identifying specific applications where edge computing will provide the most value. Focus on latency-sensitive, bandwidth-intensive, and real-time decision-making use cases to ensure resources are allocated effectively.

- Adopt Modular Design: Use microservices, containerization, and APIs to create a modular edge architecture. Modular systems allow individual components to be updated or replaced without affecting the entire system, promoting flexibility and scalability.

- Implement Robust Security: Secure your edge architecture with multi-layered security protocols. Employ end-to-end encryption, device authentication, intrusion detection systems, and regular vulnerability assessments to mitigate risks. Adopt a zero-trust security model to minimize potential breaches.

- Optimize Data Flow: Minimize data transmission by using data filtering, aggregation, and caching mechanisms at the edge. Only send critical or pre-processed data to central servers, reducing bandwidth usage and improving efficiency.

- Leverage Edge-Oriented Tools: Utilize specialized platforms like AWS IoT Greengrass or Azure IoT Edge to simplify deployment, management, and updates of edge applications. These platforms provide pre-built tools and services to accelerate development.

- Design for Resilience: Ensure edge nodes can operate independently during network outages. Incorporate failover mechanisms and redundancy to maintain critical functions even if the connection to central servers is lost.

- Standardize Communication Protocols: Use widely adopted communication protocols (e.g., MQTT, CoAP, OPC UA) to ensure compatibility between diverse devices and systems. Standardization reduces integration complexity and enhances scalability.

- Monitor and Manage Remotely: Deploy robust monitoring tools to track the performance, health, and security of edge nodes. Use automated management solutions to reduce manual intervention and improve operational efficiency.

- Emphasize Energy Efficiency: Select energy-efficient hardware and software solutions to reduce power consumption. This is especially critical for edge nodes deployed in remote or resource-constrained environments.

- Collaborate Across Teams: Foster collaboration between software engineers, network architects, and cybersecurity experts to design a comprehensive edge architecture. Cross-disciplinary input ensures all critical aspects are addressed.
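
The "Design for Resilience" practice above can be sketched as a store-and-forward buffer: messages are queued locally and delivered once the uplink is available again. This is a minimal in-memory illustration under stated assumptions. The `StoreAndForward` class is hypothetical, and `send` stands in for whatever transport the node uses (for example an MQTT publish call) and is assumed to return `True` on success.

```python
from collections import deque
from typing import Callable


class StoreAndForward:
    """Buffer messages locally and deliver them in order when the uplink works."""

    def __init__(self, send: Callable[[dict], bool], max_buffered: int = 1000):
        self._send = send
        # Bounded buffer: when full, the oldest message is dropped.
        self._buffer = deque(maxlen=max_buffered)

    def publish(self, message: dict) -> None:
        self._buffer.append(message)
        self.flush()

    def flush(self) -> int:
        """Try to deliver buffered messages; return how many were sent."""
        sent = 0
        while self._buffer:
            if not self._send(self._buffer[0]):
                break  # uplink still down, keep the message for later
            self._buffer.popleft()
            sent += 1
        return sent

    def pending(self) -> int:
        return len(self._buffer)
```

A real deployment would likely persist the buffer to disk so that queued messages survive a restart, and would call `flush()` on a timer or on a reconnect event rather than only when a new message arrives.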

The Future of Edge Computing

The integration of edge computing with technologies like AI, machine learning, and 5G is set to revolutionize application architecture further. As hardware continues to advance and software engineers innovate, edge computing will likely become a cornerstone of modern systems, enabling smarter, faster, and more resilient applications.

Conclusion

Edge computing is not just a technological shift; it’s a paradigm change in how we approach application architecture. By addressing the challenges of traditional systems, it empowers software engineers to create innovative, efficient, and future-ready solutions. As edge computing continues to evolve, staying ahead of its trends and best practices will be crucial for any software engineering professional.
