Imagine joining your morning stand-up and realizing half the “team” doesn’t breathe, doesn’t sleep, and shipped three pull requests while you were brushing your teeth.

This is not a sci‑fi scenario. This is already happening.

Software engineering is quietly undergoing its biggest structural shift since the invention of high-level programming languages. Not cloud. Not DevOps. Not even Agile.

The real change? AI agents are becoming first-class coworkers.

Not tools.

Not copilots.

Coworkers.

This article is not about prompt engineering. It’s not about using ChatGPT better. And it’s not another “AI will replace developers” hot take.

This is about something deeper and far more disruptive:

Software engineering is evolving from human-centered execution to human‑orchestrated intelligence systems.

If you’re a developer, tech lead, architect, or engineering manager, this shift will redefine:

- How code is written

- How teams are structured

- How performance is measured

- How careers are built

And most importantly:

Those who learn to design systems with AI agents will leapfrog those who merely use them.

Let’s break it down from first principles.

🧠 What Exactly Is an AI Agent (And Why This Is Different)

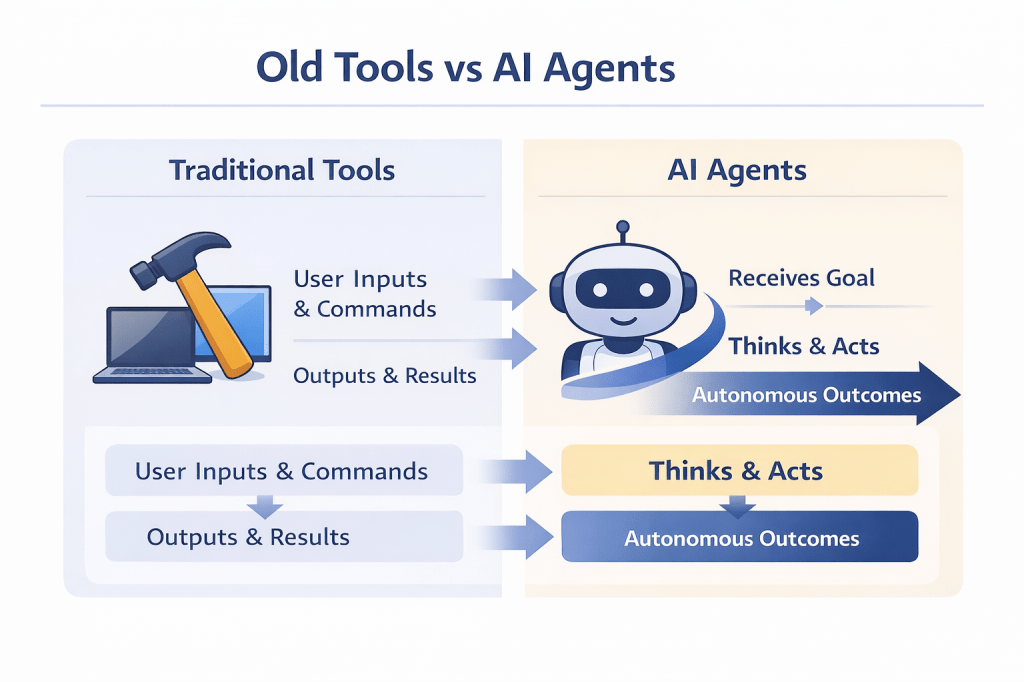

📊 Diagram 1: Tool vs AI Agent (Mental Model)

This diagram highlights the fundamental shift: tools wait, agents act.

An AI agent is not just a chatbot.

An AI agent:

- Has a goal

- Can plan steps to achieve it

- Can use tools (APIs, IDEs, CI/CD, browsers)

- Can observe results and adapt

- Can run continuously or asynchronously

In other words, an AI agent behaves like a junior-to-mid-level engineer who never gets tired.
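To make those five traits concrete, here is a minimal sketch of the goal, plan, act, observe loop. Everything in it is hypothetical: the `Agent` class, the `tools` dictionary, the `fake_search` tool, and the hard-coded plan are stand-ins for whatever model and tooling a real agent would use.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Agent:
    """Toy agent: holds a goal, a set of tools, and a memory of observations."""
    goal: str
    tools: dict[str, Callable[[str], str]]
    memory: list[str] = field(default_factory=list)  # persistent state across steps

    def plan(self) -> list[tuple[str, str]]:
        # Assumption: a real agent would ask a model to produce this plan.
        return [("search", self.goal), ("summarize", "search results")]

    def run(self, max_steps: int = 5) -> list[str]:
        for tool_name, argument in self.plan()[:max_steps]:
            tool = self.tools.get(tool_name)
            if tool is None:
                # Observe the failure and adapt instead of crashing.
                self.memory.append(f"skipped {tool_name}: no such tool")
                continue
            self.memory.append(tool(argument))  # act, then record the observation
        return self.memory


def fake_search(query: str) -> str:
    # Hypothetical tool; a real one would call an API or a code index.
    return f"found 3 documents about '{query}'"


agent = Agent(goal="reduce API latency", tools={"search": fake_search})
observations = agent.run()
```

The agent uses the one tool it was given, notes that the `summarize` tool is missing, and keeps both facts in memory. That record of what happened is the "persistent state" a plain tool does not have.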

Tool vs Agent: The Mental Shift

| Tool | Agent |
|---|---|
| Reacts to commands | Acts toward goals |
| Single interaction | Persistent state |
| User-driven | Self-directed within constraints |
| Disposable | Accountable |

This distinction matters because engineering workflows are built around humans, not autonomous collaborators.

And that’s the problem.

🏗️ Software Engineering Was Built for Humans

Let’s be honest: most engineering practices exist to compensate for human limitations.

- Agile → humans forget priorities

- Code reviews → humans make mistakes

- Standups → humans lose alignment

- Documentation → humans leave teams

- CI checks → humans forget edge cases

Now introduce AI agents that:

- Don’t forget

- Don’t context-switch

- Don’t burn out

- Don’t get defensive in code reviews

Suddenly, many of our assumptions break.

We’re running 21st-century intelligence on 20th-century processes.

🧩 The Rise of the Hybrid Engineering Team

📊 Diagram 2: Hybrid Engineering Team Structure

Humans define direction. Agents scale execution.

The future engineering team is not:

- All humans

- All AI

It’s hybrid by default.

A Realistic Near-Future Team

- Human engineers: strategy, architecture, ethics, judgment

- AI agents: implementation, refactoring, testing, monitoring

Think of agents as:

- Perpetual junior engineers

- Hyper-fast QA specialists

- Tireless documentation writers

- Obsessive refactoring machines

The competitive advantage won’t come from having AI agents.

It will come from organizing them effectively.

🧑‍💻 New Engineering Roles You Haven’t Heard Of (Yet)

Just as DevOps created new roles, AI agents will too.

1. Agent Architect

Designs:

- Agent responsibilities

- Boundaries and permissions

- Failure modes

This role is about system design, not prompts.

2. Agent Operations Engineer (AgentOps)

Responsible for:

- Monitoring agent behavior

- Cost control

- Drift detection

- Output validation

Think SRE, but for intelligence.

3. Human-in-the-Loop Engineer

Defines:

- When humans intervene

- Approval thresholds

- Escalation logic

This role protects against silent failures.
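Approval thresholds and escalation logic can be written down as an explicit routing policy. The sketch below is one possible shape, not a standard: `ProposedChange`, `route`, and the threshold of five files are all assumptions chosen for illustration.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    AUTO_APPROVE = "auto_approve"
    HUMAN_REVIEW = "human_review"
    ESCALATE = "escalate"


@dataclass(frozen=True)
class ProposedChange:
    description: str
    files_touched: int
    touches_production: bool


def route(change: ProposedChange, review_threshold: int = 5) -> Decision:
    """Decide who must sign off on an agent's proposed change."""
    if change.touches_production:
        return Decision.ESCALATE      # production changes always go to an owner
    if change.files_touched > review_threshold:
        return Decision.HUMAN_REVIEW  # large diffs need a human reviewer
    return Decision.AUTO_APPROVE      # small, non-production changes pass through


small = route(ProposedChange("rename variable", files_touched=1, touches_production=False))
large = route(ProposedChange("refactor module", files_touched=12, touches_production=False))
risky = route(ProposedChange("alter schema", files_touched=2, touches_production=True))
```

Because the policy is ordinary code, it can be reviewed, versioned, and tested like any other part of the system.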

🔄 From Pull Requests to Intent Pipelines

📊 Diagram 3: Workflow Evolution

Engineering shifts from managing steps to managing outcomes.

Traditional workflow:

Ticket → Code → Review → Merge → Deploy

Agent-native workflow:

Intent → Decomposition → Parallel Execution → Validation → Deployment

Instead of assigning tasks, engineers define intent:

“Improve API latency by 30% without breaking backward compatibility.”

Agents:

- Analyze bottlenecks

- Propose changes

- Implement alternatives

- Run experiments

Humans:

- Approve direction

- Review trade-offs

- Own accountability

This is a fundamental shift.
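Here is a rough sketch of that pipeline for the latency example. The approaches, the benchmark numbers, and every name (`Intent`, `Candidate`, `decompose`, `execute`, `validate`) are invented for illustration; a real agent would derive them from profiling and experiments.

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass


@dataclass(frozen=True)
class Intent:
    goal: str
    constraint: str


@dataclass(frozen=True)
class Candidate:
    name: str
    latency_gain_pct: float
    breaks_compatibility: bool


def decompose(intent: Intent) -> list[str]:
    # Assumption: an agent would derive these approaches from profiling data.
    return ["add response cache", "batch database queries", "drop legacy v1 fields"]


def execute(approach: str) -> Candidate:
    # Hypothetical experiment results; a real agent would run benchmarks here.
    results = {
        "add response cache": Candidate("add response cache", 35.0, False),
        "batch database queries": Candidate("batch database queries", 18.0, False),
        "drop legacy v1 fields": Candidate("drop legacy v1 fields", 40.0, True),
    }
    return results[approach]


def validate(candidate: Candidate, target_pct: float = 30.0) -> bool:
    # Enforce both halves of the intent: the target and the constraint.
    return candidate.latency_gain_pct >= target_pct and not candidate.breaks_compatibility


intent = Intent("Improve API latency by 30%", "no breaking changes")
with ThreadPoolExecutor() as pool:
    candidates = list(pool.map(execute, decompose(intent)))  # parallel execution

# Only validated candidates reach the human, who still approves the direction.
shortlist = [c.name for c in candidates if validate(c)]
```

In this toy run the fastest option is rejected because it breaks compatibility, and only the cache proposal reaches the human for approval.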

⚠️ Why Most Teams Will Get This Wrong

Here’s the uncomfortable truth:

Most organizations will simply bolt AI onto existing workflows.

They will:

- Use agents like faster interns

- Trust outputs blindly

- Ignore systemic risks

And they will fail quietly.

Failure Mode #1: Automation Without Understanding

Agents amplify mistakes faster than humans can catch them.

Failure Mode #2: No Ownership

When an agent breaks production, who is responsible?

Failure Mode #3: Skill Atrophy

Over-delegation leads to engineers who can’t reason about their own systems.

The winners will be deliberate.

🛡️ Guardrails: Engineering for AI Safety (Practically)

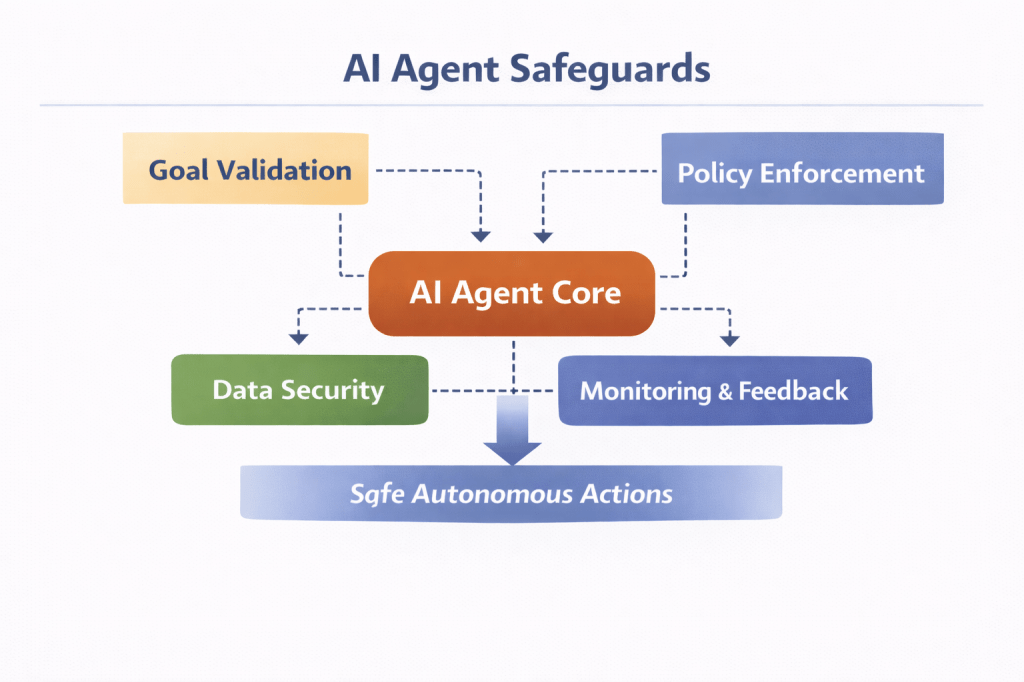

📊 Diagram 4: AI Agent Guardrail Architecture

Guardrails ensure agents are powerful and safe.

Forget abstract AI ethics.

Here’s what actually matters in engineering teams:

1. Capability Boundaries

Agents should:

- Have limited permissions

- Operate in sandboxes

- Require approval for irreversible actions
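One way to express limited permissions and approval gates is an allowlist checked before every action. This is a minimal sketch: `CapabilityPolicy`, `ApprovalRequired`, and the action names are assumptions, and sandboxing is left out because it depends on the runtime.

```python
from dataclasses import dataclass, field
from typing import Optional


class ApprovalRequired(Exception):
    """Raised when an action needs a human sign-off before it can run."""


@dataclass
class CapabilityPolicy:
    allowed: set[str]                                    # actions the agent may take at all
    irreversible: set[str] = field(default_factory=set)  # actions gated on approval

    def check(self, action: str, approved_by: Optional[str] = None) -> str:
        if action not in self.allowed:
            raise PermissionError(f"agent may not perform '{action}'")
        if action in self.irreversible and approved_by is None:
            raise ApprovalRequired(f"'{action}' needs human approval")
        return f"{action}: permitted"


policy = CapabilityPolicy(
    allowed={"open_pull_request", "run_tests", "delete_branch"},
    irreversible={"delete_branch"},
)

ok = policy.check("run_tests")
try:
    policy.check("delete_branch")  # no approver given, so this is blocked
    blocked = False
except ApprovalRequired:
    blocked = True
approved = policy.check("delete_branch", approved_by="alice")
```

Anything outside the allowlist fails with `PermissionError`, so a new capability has to be granted explicitly and is never assumed.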

2. Deterministic Validation

Every agent action should be:

- Testable

- Reproducible

- Auditable
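Auditability can start with something as small as a tamper-evident log entry per action. The sketch below assumes a hypothetical `audit_record` helper and made-up field names; it fingerprints each action with SHA-256 over a canonical JSON form so that identical inputs always produce the same digest.

```python
import hashlib
import json


def audit_record(agent_id: str, action: str, inputs: dict) -> dict:
    """Build an audit entry whose digest depends only on the action and its inputs."""
    # sort_keys gives a canonical serialization, so key order cannot change the digest.
    canonical = json.dumps({"action": action, "inputs": inputs}, sort_keys=True)
    digest = hashlib.sha256(canonical.encode("utf-8")).hexdigest()
    return {"agent_id": agent_id, "action": action, "inputs": inputs, "digest": digest}


first = audit_record("agent-7", "apply_patch", {"file": "api.py", "patch_id": 42})
second = audit_record("agent-7", "apply_patch", {"patch_id": 42, "file": "api.py"})
same_digest = first["digest"] == second["digest"]
```

Two records for the same action match even when the inputs arrive in a different order, which makes replays and comparisons straightforward.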

3. Cost and Resource Controls

Unbounded agents burn money silently. Give each agent a hard budget for spend and runtime, and stop it when the budget runs out.
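A spending cap does not need much machinery. Here is a minimal sketch, assuming a hypothetical `Budget` class that tracks cost in cents (integers avoid floating-point drift) and refuses any charge that would exceed the limit.

```python
class BudgetExceeded(Exception):
    """Raised when a charge would push spending past the limit."""


class Budget:
    def __init__(self, limit_cents: int) -> None:
        self.limit_cents = limit_cents
        self.spent_cents = 0

    def charge(self, cost_cents: int) -> int:
        """Record a cost and return the remaining budget, or refuse the charge."""
        if self.spent_cents + cost_cents > self.limit_cents:
            raise BudgetExceeded(
                f"charge of {cost_cents} would exceed limit of {self.limit_cents}"
            )
        self.spent_cents += cost_cents
        return self.limit_cents - self.spent_cents


budget = Budget(limit_cents=500)
budget.charge(200)
remaining_after_two = budget.charge(200)
try:
    budget.charge(200)  # third call would bring the total to 600
    stopped = False
except BudgetExceeded:
    stopped = True
```

The third call is rejected before any money is spent, so the failure is loud and early instead of showing up on next month's invoice.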

📈 Measuring Productivity in an Agent-First World

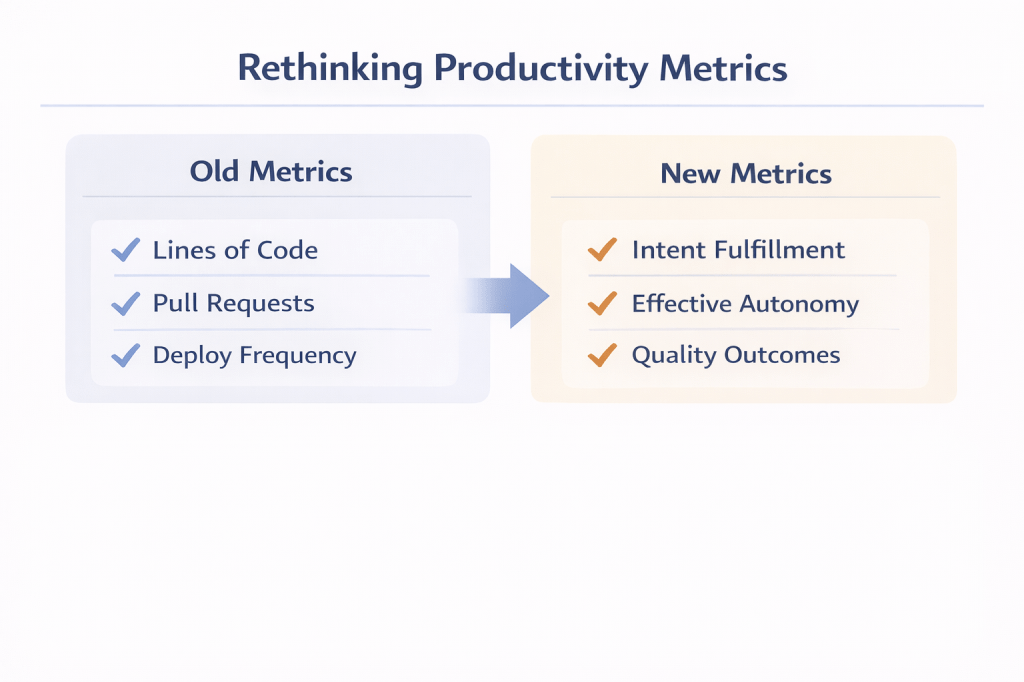

📊 Diagram 5: Productivity Signal Shift

As agents scale execution, human value moves up the abstraction ladder.

Lines of code were a bad metric.

Story points were worse.

Now they’re meaningless.

New Metrics That Matter

- Intent success rate

- Human intervention frequency

- Time-to-decision

- Failure containment radius

Engineering leaders who don’t adapt metrics will lose visibility.
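Two of these metrics are easy to compute once each intent run is logged. The definitions below are assumptions, since the terms are not standardized: success rate is the share of runs that met their intent, and intervention frequency is the average number of human interventions per run. `IntentRun` and the sample data are made up.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class IntentRun:
    succeeded: bool
    human_interventions: int


def intent_success_rate(runs: list[IntentRun]) -> float:
    """Share of runs that met their intent."""
    if not runs:
        raise ValueError("need at least one run")
    return sum(run.succeeded for run in runs) / len(runs)


def intervention_frequency(runs: list[IntentRun]) -> float:
    """Average number of human interventions per run."""
    if not runs:
        raise ValueError("need at least one run")
    return sum(run.human_interventions for run in runs) / len(runs)


runs = [
    IntentRun(succeeded=True, human_interventions=0),
    IntentRun(succeeded=True, human_interventions=2),
    IntentRun(succeeded=False, human_interventions=1),
    IntentRun(succeeded=True, human_interventions=1),
]
success_rate = intent_success_rate(runs)              # 3 of 4 runs succeeded
interventions_per_run = intervention_frequency(runs)  # 4 interventions over 4 runs
```

Tracked over time, a rising success rate alongside falling interventions suggests the team is getting better at framing intent.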

🎓 What This Means for Your Career

Let’s be blunt.

Skills That Will Decline in Value

- Syntax memorization

- Boilerplate-heavy coding

- Manual test writing

Skills That Will Explode in Value

- System design

- Problem framing

- Trade-off analysis

- Architecture judgment

- Ethical reasoning

The best engineers won’t code less.

They’ll code more intentionally.

🧠 A Mental Model Shift You Must Make

Stop asking:

“How can AI help me write code faster?”

Start asking:

“How do I design systems where intelligence compounds?”

This is the same shift that separated:

- Scripters from engineers

- Engineers from architects

- Architects from technical leaders

🧪 A Simple Experiment You Can Run This Week

- Pick a non-trivial refactoring task

- Define the intent, not the steps

- Let an AI agent propose multiple approaches

- Compare trade-offs

- You decide

Notice where your judgment mattered.

That’s your future value.

🔮 The Long-Term Bet

In five years:

- Teams without agents will feel slow

- Engineers without orchestration skills will plateau

- Organizations without governance will face disasters

This isn’t optional.

It’s structural.

🧭 Final Thought

Software engineering has always been about managing complexity.

AI agents don’t remove complexity.

They move it.

From keystrokes to decisions.

From syntax to systems.

From execution to intent.

The engineers who win won’t fight this shift.

They’ll design it.
